Asimov's Three Laws of Robotics

Plus, the Three Laws of Human-AI Machine Collaboration

“The Machine is only a tool after all, which can help humanity progress faster by taking some of the burdens of calculations and interpretations off its back. The task of the human brain remains what it has always been; that of discovering new data to be analyzed, and of devising new concepts to be tested.”

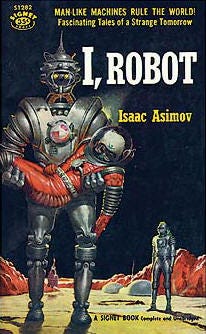

– Isaac Asimov, I, Robot

As you have probably seen in my previous posts, I am a great admirer of Isaac Asimov. Asimov was a science fiction writer and professor of biochemistry at Boston University. His writings about robotics and the interactions between humans and technology began in the 1940s and continued until his death in 1992 (and even afterward, as some of his works were published posthumously in 1993).

The Three Laws of Robotics, presented below, were first stated in one of his earliest robot short stories, “Runaround” (1942), in which a robot breaks down because it cannot reconcile the Second and Third Laws. The story was later gathered with others into the collection I, Robot (1950), which presents the laws as follows:

Handbook of Robotics, 56th edition, 2058 A.D.:

The First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

The Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

The Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Marvin Minsky credited the Three Laws of Robotics, and the “Runaround” story, as the inspiration for his work in artificial intelligence (New York Times, 1992).

Toward the end of his life, Asimov added what he called the Zeroth Law, which takes precedence over the other three (Asimov, 1985):

The Zeroth Law: A robot may not harm humanity, or, through inaction, allow humanity to come to harm.

Generative artificial intelligence as it currently exists is a far cry from the positronic brains of Asimovian robots, but that does not stop us from considering how these principles could be applied to the technology.

When I started to write this post, I assumed that I was one of the first to consider how the Three Laws could be applied and adapted to AI, but I was wrong! As early as 2006, Lee McCauley examined the idea with various AI researchers in a paper entitled “AI Armageddon and the Three Laws of Robotics.” Essentially, he and the researchers argued that applying these rules directly to the AI machines of 2006 was impractical and, in some cases, impossible or even harmful.

Since then, various authors have questioned the usefulness of the laws for other reasons, especially in light of the new “generative” branch of AI on which this blog is focused. An alarming number of these authors dismiss the laws out of hand, arguing that, since generative AI machines cannot reason, we should ignore the laws or, at most, apply them only partially.

As Asimov wrote in the introduction to The Rest of the Robots, his second collection of robot stories, “Knowledge has its dangers, yes, but is the response to be a retreat from knowledge? Or is knowledge to be used as itself a barrier to the dangers it brings?” (Asimov, 1964, p. 10).

One of the most frequently cited real-world flaws of Asimov's Three Laws is that they assume robots are rational, even possessing almost omniscient knowledge of the chains of consequences that follow from their actions. Of course, generative AI tools do not have that reasoning power (no machine does at the moment).

This gap between science and science fiction underscores the need for deliberate human oversight: we need to “collaborate” with AI rather than “automate,” issuing instructions and then leaving the machine alone. We need to “bring the human” to human-AI interactions. Without our reasoning, the AI will not be productive and will not follow the spirit of the Three Laws.

Ajay Varma does an excellent job of examining the first two Laws, but he takes a slightly different tack than I do. He treats the generative AI tools themselves as the entities that make decisions, and then dismisses the Third Law because AI cannot act toward self-preservation. From his perspective, that is perfectly valid. However, if we think of AI tools as tools, then they are not making their own decisions, as the robots in Asimov's stories do. Who is deciding? Humans.

Of course, as one insightful poster on LinkedIn noted, human intelligence has been used to create many evil and regrettable products and events. Where there is potential for great good, there is also potential for our knowledge and skills to be used for evil. As Milton wrote in Paradise Lost, “within himself the danger lies, yet lies within his power.”

Three Laws for Humans Using AI Machines

I think that Asimov's Three Laws, as they now stand, work just fine as general guidelines for building and using AI tools. Users need to remember that, because they are the brain of the “robot” following the laws, the laws apply to them. Their prompts and objectives must adhere to the laws for the machine to follow the laws, as Martin Lee of Cisco found out during an anecdotal test.

When you rewrite Asimov's Laws to account for the abilities of generative AI and the responsibilities of human users, the result is:

The Three Laws of Human-AI Machine Collaboration

The First Law: A human may not use generative AI to injure a human being or, through inaction, allow a human being to come to harm.

The Second Law: A human may use generative AI tools for any objective they desire, except where such objectives would conflict with the First Law.

The Third Law: A human must engage in good-faith, literate collaboration with AI tools in every interaction as long as such collaboration does not conflict with the First or Second Law.

The Zeroth Law: A human may not use generative AI to injure humanity or, through inaction, allow humanity to come to harm.

Note that the list above contains an analogue for each of Asimov's four laws. I still call them the Three Laws as a callback to Asimov, and because the Zeroth Law supersedes, or at least gives context to, the First.

Much like the original laws in Asimov's stories, these laws will not produce perfect outputs or completely ethical products without human intervention. One need only read “Liar!” or “Little Lost Robot” to see why humans need to be “in the loop” for every collaboration.

Furthermore, as the Third Law of Human-AI Machine Collaboration says, humans must make “good-faith” efforts in these collaborations. This means that they need AI literacy: an understanding of what AI can and cannot do. Otherwise, we get caught up on the fact that generative AI tools often miscount the letter “r” in the word “strawberry.” There is a perfectly reasonable explanation for this (these tools process text in chunks called tokens, not as individual letters), but if we do not understand how and why an AI tool was made, we may either 1) discount it or 2) misuse it.

The result of following the first three laws is, hopefully, the fulfillment of the Zeroth: humanity, and human intelligence, will be the driving force behind generative AI use. We will keep ourselves, our objectives, and humanity's best interests in mind.

Let me know your thoughts about these ideas! In a future post, I will examine the concept of “artificial intimacy,” “artificial companionship,” or “programmed rapport,” and how this can blur the lines between humanity and machine, and can cause us to forget the Laws of Human-AI Machine Collaboration.

References

Asimov, I. (1942, March). “Runaround.” Astounding Science Fiction.

Asimov, I. (1964). “Introduction.” The Rest of the Robots. Doubleday.

Asimov, I. (1985). Robots and Empire. Doubleday.

New York Times. (1992, April 12). “Technology; A Celebration of Isaac Asimov.” https://www.nytimes.com/1992/04/12/business/technology-a-celebration-of-isaac-asimov.html?pagewanted=all&src=pm