BoodleBox Privacy and Security

Both Third-Party Agreements and Internal Data Collection Practices

REALLY Quick Plug

Today (July 8, 2025) I am going to talk about privacy and other ethical issues with AI in the Library 2.0 Workshop: “Creating an AI Ethical Framework.” The price is $129/person with some special pricing for groups.

I hope that we all have a productive and engaging conversation about the ethical issues surrounding AI and how we can respond to them in our practice!

Introduction

In my last post, I introduced you to BoodleBox. I mentioned that there are multiple benefits of BoodleBox for education and the workplace, and briefly mentioned that BoodleBox has excellent privacy policies and practices.

This post explains more about how BoodleBox interacts with third parties, the assessments and certifications they possess, and, more important, how their internal data collection ensures that your personal data is not only not passed back, but is not collected in the first place! These features make it one of the best, if not the best, AI tools for education in the K-12 space, as well as higher education.

Privacy

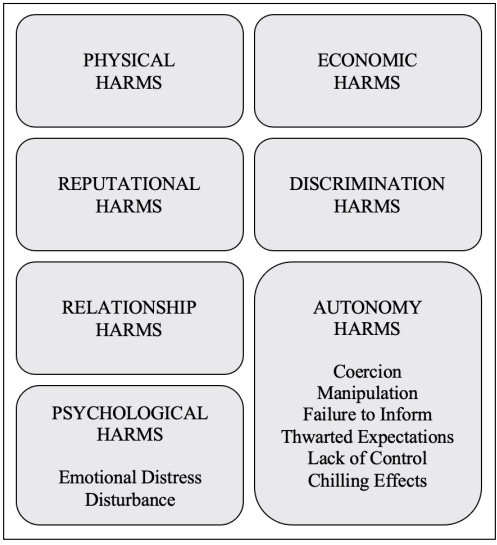

Privacy and security may seem to be exactly the same thing, but they are not. Privacy relates to one’s personal data and to the potential harms that can occur when that data is misused. For more information about potential harms from privacy violations, refer to the image above and the document from which it comes, Privacy Harms by Danielle Keats Citron and Daniel Solove. All of these are harms that could happen if someone misuses our personal data, and all of these are prevented by the efforts, procedures, and services of BoodleBox.

NYT vs. OpenAI

Privacy has been at the forefront of BoodleBox since it was created in 2023. They quickly became certified as FERPA compliant, and from the beginning anonymized all personal data that was passed to model providers. They also have API contracts with model providers that prevent any model from training on user data and prompts.

This became especially important in June 2025, when a federal court's preservation order in the New York Times lawsuit required OpenAI to retain ChatGPT chats, including ones users had deleted, regardless of privacy agreements or user preferences. The order superseded all business agreements. This meant that all user chats that were unmediated (like mine!) or covered by non-privacy-minded API agreements were preserved verbatim for potential review in the litigation. Even if you went to a third party to “call” the API, your chat as recorded with OpenAI could be retained!

BoodleBox Privacy Maintenance Practices

But what happened to BoodleBox?

Their chats were not touched at all, for two reasons:

BoodleBox accesses OpenAI models exclusively through Enterprise API accounts, which are specifically excluded from the court's preservation order.

Beyond this exemption, they take the additional step of anonymizing all prompts before they even reach model providers.

Furthermore, BoodleBox’s internal policies and procedures added further protections:

No third-party apps of any kind (including ChatGPT plugins or tools) are allowed to interface with or access BoodleBox data.

BoodleBox does not train their internal models on user data, including chats and knowledge documents. Instead, they use complex prompts to optimize the functions of these Custom Bots. The only time your data is used is when you volunteer it or select it from the Knowledge Bank!

BoodleBox reduces the number of input tokens by 96%, so even before anonymization, the amount of data passed back to the API is significantly reduced.
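To make "anonymizing prompts before they reach model providers" a little more concrete, here is a minimal sketch of the general idea. To be clear, this is my own illustration and not BoodleBox's actual method, which this post does not describe: the `PATTERNS` table and the `anonymize_prompt` function are hypothetical names, and a real anonymizer would need to handle far more than three kinds of identifiers.

```python
import re

# Hypothetical patterns for illustration only; a real anonymizer would
# cover many more identifier types (names, addresses, student IDs, ...).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def anonymize_prompt(prompt: str) -> str:
    """Replace each matched identifier with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


example = "Email jane.doe@example.edu or call 555-123-4567 about my grade."
print(anonymize_prompt(example))
# prints: Email [EMAIL] or call [PHONE] about my grade.
```

The point of the sketch is the ordering: the placeholder substitution happens before anything is sent to a model provider, so the provider only ever sees `[EMAIL]`, never the address itself.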

BoodleBox Privacy Policy

Practices and assurances are good things, but how are those codified internally? Here are the most salient points of BoodleBox’s Privacy Policy:

Choices you make and features you use on BoodleBox’s website are tracked in order to optimize your experience.

No third-party data from cookies is transmitted to BoodleBox, nor do they pass the data they track to third parties.

BoodleBox pays special attention to security and fraud prevention on your behalf.

During sessions, BoodleBox collects user communication preferences, prompts, usage data, and device and location data (primarily IP and device data that is collected anyway).

In addition to personal communications and troubleshooting, BoodleBox uses your information for marketing and identifying usage trends.

You can request deletion of your data, or information about what data has been collected about you, by contacting info@boodle.ai.

Certifications

Okay, so BoodleBox has good practices and a privacy policy. Whoop-dee-doo! How is that verified? Anyone can say that they “anonymize,” but how do we know exactly how far and thorough that anonymization is? Well, that is why certifications exist. Below is a timeline of certifications and developments in BoodleBox privacy.

2023: BoodleBox initiates FERPA compliance

FERPA: Family Educational Rights and Privacy Act, signed 1974.

Applies to any public or private elementary, secondary, or post-secondary school, and any education agency, that receives federal funds from the Department of Education

Students have the right to inspect and review education records and halt release of personally identifiable information

Students can obtain a copy of their educational records

Other parties are prohibited from accessing any of the above records without the written consent of the student or representative.

From the very beginning, BoodleBox committed to be FERPA compliant. They anonymized all user data and entered into Enterprise API contracts that specifically excluded passback of user data to model developers.

BoodleBox Terms of Service explicitly states that it follows all regulations expected of a “school official,” and will only disclose education records as directed or expected by the educational institution or the student user.

16 June 2025: BoodleBox becomes HIPAA, GDPR and SOC2 Type II compliant!

HIPAA: The Health Insurance Portability and Accountability Act, which was enacted in 1996.

This law is why your nurse cannot talk on the phone with your friends about your soccer injury without your say-so.

In more important terms, it means that third parties cannot take advantage of medical knowledge, whether to avoid liability or for financial gain.

Elements covered by HIPAA include health plans, healthcare providers, personally identifiable information, past, present, or future physical or mental health conditions, and health-related payment information.

Being HIPAA compliant means that BoodleBox takes the time to remove all health-related information from chats it passes back to third-party providers, and that this practice has been assessed as meeting HIPAA's requirements.

GDPR: The General Data Protection Regulation. A law passed by the European Union, in effect since 2018, that was quickly heralded as the “toughest privacy and security law in the world.”

Honestly, GDPR compliance alone is an indicator that BoodleBox cares about privacy.

Entities that comply with the GDPR have to show that they acknowledge and protect people’s:

Right to be informed

Right of access

Right to rectification

Right to erasure

Right to restrict processing

Right to data portability

Right to object

Rights in relation to automated decision making and profiling

Data covered by the GDPR includes data related to:

personally identifiable data,

location information,

ethnicity,

gender,

biometric data,

religious beliefs,

web cookies that are tracking the user, and

political opinions.

BoodleBox has to do more than just show that they are protecting these aspects of user data and their rights. They have to have certain structures and policies and practices in place, including:

Data controller: a person at BoodleBox who:

tracks exactly how and when GDPR compliance is maintained

designates others in the organization who have specific data protection responsibilities

trains all staff on data protection policies

creates and manages Data Processing Agreements with all third parties (in this case, only the model providers)

Verifiable technical and organizational measures, including two-factor authentication, end-to-end encryption, data privacy policies, limiting access to personal data, etc.

In every instance of using user data, even internally, they have to:

Document the justification for processing user data

Notify all users whose data is being used and receive consent, which is done in this Data Processing Addendum.

Notify users about any developments or changes in the data use plan.

SOC2 Type II: System and Organization Controls 2 (SOC 2), a reporting framework developed by the American Institute of Certified Public Accountants (AICPA) in 2010.

This, in contrast with other certifications, comes from a private entity, which demonstrates that BoodleBox is gaining the confidence of both public and private organizations.

SOC evaluates the data protection and use controls at a “service organization.”

Whereas GDPR focuses on the structure and policies of an institution, SOC2 focuses on preparedness for certain risks in:

The organization

The system through which the organization provides services to users

The nature of the services themselves.

Security

Security is different from privacy: it has to do with the sharing and maintenance of data and documents you upload to BoodleBox. For example, if you upload a document to your personal Knowledge Bank, it is not visible to your students or your educators unless you explicitly share it with certain users or groups.

Security has more to do with confidentiality and with preventing plagiarism or harm to business or intellectual property rights. BoodleBox protects file and prompt security in the following ways:

Uploaded documents are encrypted and stored on Amazon Web Services.

BoodleBox practices encryption both in transit and at rest, which means that Knowledge Bank files are encrypted during and after uploading or sharing with other users.

BoodleBox uses access controls for documents, chats, and groups.

BoodleBox undergoes regular security audits (in fact, they’re required by an EU DPA) and penetration testing (think “corporate hacking”) and regularly manages risks, both internally and with vendors, such as their data subprocessors.

BoodleBox enables granular access that can be administered by group leaders or educators.

Similarly, BoodleBox engages with all user data according to the principle of “Least Privilege Access”: if you do not absolutely need an aspect of a user’s data to help them, you do not have access to it. This is reviewed quarterly, with access being given and removed based on need for particular projects or roles.

After thorough background checks, BoodleBox employees undergo regular Security Awareness Training.

Any Knowledge Bank documents are parsed, and only the small portion considered relevant is anonymized and then passed back to the API. Anonymization and parsing prevent model providers from using these documents for model training.
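The "only the relevant parts" step in the last item can be pictured with a small sketch. This is a deliberately naive illustration under my own assumptions, not a description of how BoodleBox parses documents: the `select_relevant_passages` function is hypothetical, and it scores paragraphs by simple word overlap with the question, where a production system would likely use something more sophisticated.

```python
def select_relevant_passages(
    document: str, question: str, max_passages: int = 2
) -> list[str]:
    """Return the paragraphs that share the most words with the question."""
    question_words = set(question.lower().split())
    paragraphs = [p.strip() for p in document.split("\n\n") if p.strip()]

    scored = []
    for index, paragraph in enumerate(paragraphs):
        overlap = len(question_words & set(paragraph.lower().split()))
        if overlap > 0:  # paragraphs with no overlap are never sent
            scored.append((overlap, index, paragraph))

    # Highest overlap first; ties keep the original document order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [paragraph for _, _, paragraph in scored[:max_passages]]


syllabus = (
    "The essay is due on Friday.\n\n"
    "Office hours are held on Tuesdays.\n\n"
    "Late work loses ten points."
)
relevant = select_relevant_passages(syllabus, "when is the essay due")
print(relevant)
```

In this toy syllabus, only the paragraph about the essay overlaps with the question, so the other two paragraphs would never leave the Knowledge Bank. Whatever is selected would still need to be anonymized before being passed to the API.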

BoodleBox Direct Data Collection

Whereas other education-centered AI mediators insist on a wide range of data from users in order to build a thorough profile on them, BoodleBox only collects:

username and password,

email address,

payment information,

platform usage statistics,

further information only as voluntarily given in a formal survey.

In micro and macro, BoodleBox practices Least Privilege Access. If none of their individual employees need data about you, why would the organization at large?
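For readers who want to see what Least Privilege Access looks like as logic, here is a minimal sketch. Everything in it is an assumption of mine for illustration: the `AccessGrant` and `AccessRegistry` names are hypothetical, and the 90-day window is only a stand-in for the quarterly review mentioned earlier. The two ideas it shows are that access is denied by default, and that every grant is tied to a reason and expires unless it is renewed.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class AccessGrant:
    employee: str
    data_field: str
    reason: str  # every grant must be justified by a project or role
    granted_on: date


@dataclass
class AccessRegistry:
    # Assumed stand-in for a quarterly review cycle.
    review_period: timedelta = timedelta(days=90)
    grants: list[AccessGrant] = field(default_factory=list)

    def grant(self, employee: str, data_field: str, reason: str, today: date) -> None:
        self.grants.append(AccessGrant(employee, data_field, reason, today))

    def can_access(self, employee: str, data_field: str, today: date) -> bool:
        """Deny by default; allow only an unexpired grant for this exact field."""
        return any(
            g.employee == employee
            and g.data_field == data_field
            and today - g.granted_on < self.review_period
            for g in self.grants
        )

    def review(self, today: date) -> int:
        """Drop expired grants and return how many were removed."""
        before = len(self.grants)
        self.grants = [
            g for g in self.grants if today - g.granted_on < self.review_period
        ]
        return before - len(self.grants)


registry = AccessRegistry()
registry.grant("support_agent", "email", "billing ticket", date(2025, 1, 1))
print(registry.can_access("support_agent", "email", date(2025, 2, 1)))         # True
print(registry.can_access("support_agent", "payment_info", date(2025, 2, 1)))  # False
print(registry.can_access("support_agent", "email", date(2025, 6, 1)))         # False
```

Notice that the support agent never gets access to payment information just because they can see an email address, and that even the email access lapses once the review window passes.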

Implications for K-12

BoodleBox's FERPA compliance makes it an ideal platform for K-12 environments where student data protection is paramount. The platform's commitment to following regulations expected of a "school official" means that administrators and teachers can trust that student interactions with AI will remain confidential and protected. This allows schools to implement the Technology Consumer or Producer (TCoP) Model that Curry et al. developed for technology integration in educational contexts as young as elementary school if desired.

Furthermore, BoodleBox's granular access controls enable teachers to monitor student AI use appropriately without compromising privacy. This balance of oversight and protection creates a safe environment for students to develop critical AI literacy skills that will be essential for their future careers. As I've argued in the past, "the workplace will expect them to use these tools," and BoodleBox provides the secure framework for students to gain this experience ethically.

As you will read below, use of BoodleBox by children should be heavily restricted, and educators should conscientiously act as the “parents” of the children in this circumstance. They should have informed consent, approval, and authorization from parents (and, theoretically, from administration).

NOTE: Minors are strictly prohibited from using BoodleBox on their own. Minors must use BoodleBox on an account created by their parent or guardian, and no other adult. Their Privacy Policy states, “we do not knowingly solicit data from or market to children under 18 years of age. By using the Services, you represent that you are at least 18 or that you are the parent or guardian of such a minor and consent to such minor dependent’s use of the Services” (emphasis and italics my own). Any data inadvertently collected from minors who did not follow this policy is deleted as soon as it is discovered.

Cultivating Consistency from the Course to the Company

My First Presentation of the AECT 2024 International Convention

Implications for Higher Education

In higher education, concerns about AI often center on academic integrity and intellectual property. BoodleBox addresses these concerns through its robust security measures and commitment to preventing plagiarism.

BoodleBox's implementation of the "Least Privilege Access" principle is particularly valuable in higher education settings where research data may be sensitive. The platform's HIPAA compliance further ensures that health-related research information remains protected, opening possibilities for AI use in medical and health science programs. Nursing programs in particular have an interest in using LLMs for case studies and for developing reports, so BoodleBox would be an excellent candidate for that use.

The platform's approach to knowledge management—where documents are encrypted both in transit and at rest—provides the security necessary for scholarly work. This allows faculty and students to collaborate with AI on research without compromising intellectual property or confidentiality. The key is bringing the "human" to "human-machine interactions," and BoodleBox's security framework enables this collaboration with additional human participants while maintaining appropriate boundaries.

Implications for the Workplace

For workplace environments, BoodleBox offers a solution to what I identified in "AI Feasibility" as a major concern: "data privacy and confidentiality is especially important when considering generative AI tools, because the data you put or imply in your prompts can never be removed from the data harvested by the tool." I talked in that post about open AI tools as a potential solution. BoodleBox's approach to anonymization and data minimization directly addresses this concern, and I am happy to add BoodleBox to my list of “feasible AI tools.”

AI Feasibility (and How Open AI Tools and Workflows Affect It)


The platform's SOC2 Type II compliance is particularly relevant for businesses, as it demonstrates preparedness for organizational risks. This certification, coming from a private entity, signals to businesses that BoodleBox meets industry standards for data protection and security.

BoodleBox's approach to CollaborAItion provides a framework for ethical AI use in professional settings. The platform's security measures enable users to partner with AI and humans at the same time while maintaining appropriate data protections. This balance is crucial for businesses that need to leverage AI capabilities without exposing sensitive information.

Review For Yourself: Trust Center

Not two weeks ago, France Hoang, the CEO and cofounder of BoodleBox, announced the BoodleBox Trust Center, which is essentially about “all things privacy and security.” The Trust Center reviews all of the measures taken by BoodleBox and provides access to the analysis and reports of auditing organizations that viewed those measures.

Conclusion

BoodleBox stands out in the AI education landscape precisely because it has addressed the privacy and security concerns that have made many institutions hesitant to adopt AI tools. By implementing robust protections—from FERPA, HIPAA, and GDPR compliance to encryption and access controls—BoodleBox has created an environment where the benefits of AI can be realized without the typical risks.

As I've consistently advocated across my work, particularly in “Navigating Benefits and Concerns When Discussing GenAI Tools with Faculty and Staff,” the answer to AI concerns is not prohibition but rather actively and publicly modeling ethical and effective genAI tool use. BoodleBox provides the secure infrastructure for this approach, allowing educators, students, and professionals to develop AI literacy and collaboration skills in a protected environment.

The platform's commitment to "Least Privilege Access" at both micro and macro levels demonstrates an understanding that privacy is not just about policies but about fundamental respect for user autonomy. In an era where data has become a commodity, BoodleBox's approach represents a refreshing alternative—one that prioritizes user privacy while still enabling powerful AI capabilities.

For K-12, higher education, and workplace settings, BoodleBox offers a path forward that balances communication with protection, allowing us to embrace the benefits of AI without compromising our privacy and security.