On Thursday I had the excellent experience of guest lecturing for Dr. Jeanne Beatrix Law, Director of Composition at Kennesaw State University. Her course on Prompt Engineering, which is a section of a Professional Writing course, discusses the most beneficial, effective, and ethical uses of generative AI tools in communication and education contexts.

Dr. Law invited me to discuss my use cases with the class as a demonstration of what the tools can do for students and professionals alike. I did so, but within the context of a larger concept. We talked about:

- the most important tasks, problems, and products they needed to complete,
- the use cases that were most applicable for these purposes, and
- how they could iterate and improve the outputs of the AI tools to come up with the most complete product.

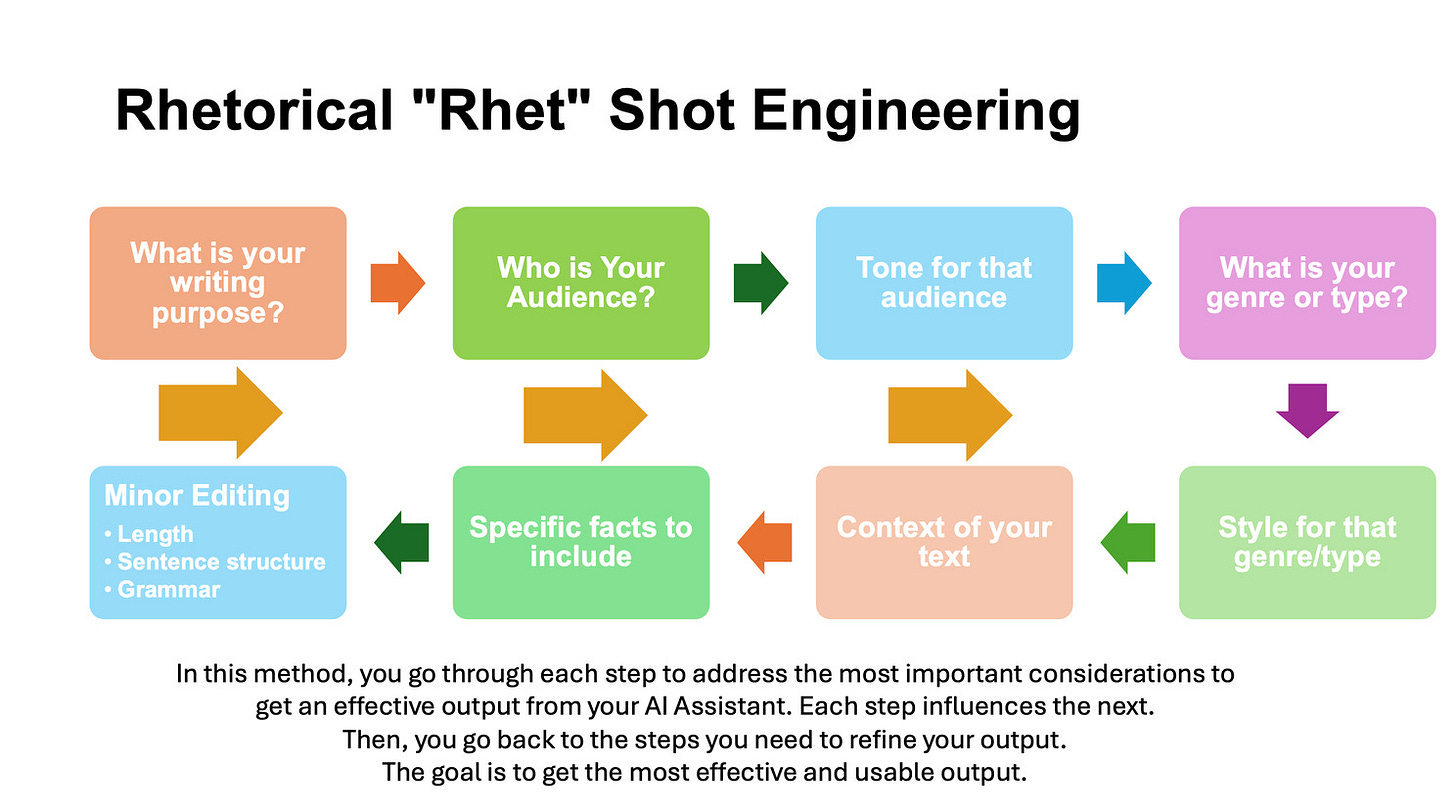

Through this discussion, we learned what I believe are three of the central ideas when one uses the Rhetoric Framework and the COSTAR Framework:

When you have a conversation with an AI tool to create or fulfill anything, you have to understand what you are doing and why you are doing it. Focusing on the goals, as you can through the Rhetoric Framework, can help you understand the data and the steps you need to communicate to the AI tool.

The most complete and accurate phrase for interacting with an AI tool might not be “prompt engineering” but “conversation structuring,” “conversation steering,” or “conversation-ing” as one student put it. Personally, I prefer to put as much information as possible in the initial prompt, through the COSTAR Framework or at least through a structured prompt. But the exact nature of every prompt does not need to follow a certain structure. What is important is the ability to keep the AI tool focused and give the necessary data and information. You can do that in multiple ways, as long as you yourself are focused.

This part is more apparent with the Rhetorical Framework, less so with the COSTAR Framework. The point of frameworks, and of the ideas behind “prompt engineering,” is less for the AI tool and more for the user. They influence the user to think through their purpose for collaborating with an AI tool.
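For readers who like to see a structured prompt written out, here is a minimal sketch in Python. It assumes the commonly cited expansion of COSTAR (Context, Objective, Style, Tone, Audience, Response). The `CostarPrompt` class, its field names, and the sample text are all hypothetical illustrations, not something we built in class and not an official template.

```python
from dataclasses import dataclass, fields


@dataclass
class CostarPrompt:
    """Hypothetical helper: one field per COSTAR element (assumed expansion)."""
    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response: str

    def render(self) -> str:
        # Build one labeled section per element, in COSTAR order.
        sections = []
        for f in fields(self):
            value = getattr(self, f.name).strip()
            if not value:
                # An empty element means the user has not thought it through yet.
                raise ValueError(f"Missing COSTAR element: {f.name}")
            sections.append(f"# {f.name.upper()}\n{value}")
        return "\n\n".join(sections)


# Made-up example content, for illustration only.
prompt = CostarPrompt(
    context="I am a student in a professional writing course.",
    objective="Draft an outline for a report on campus recycling.",
    style="Clear and organized, like a technical report.",
    tone="Professional but approachable.",
    audience="University administrators with limited time.",
    response="A numbered outline with one sentence per section.",
)
print(prompt.render())
```

The check for empty fields is the part that matters most here: it forces the person writing the prompt to decide on each element before sending anything, which is the sense in which a framework is more for the user than for the tool.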

As we talked about these main principles, we discussed how they could be applied to any use case in any type of conversation. In this way I suppose I went beyond the purpose of the class, but I felt that it was important. If we only use specialized and commercial tools, we will not be able to develop generalized AI collaboration skills. We will only know how to function with one type of tool, whose interactions may be mediated by commercial-provider defaults or options.

We also discussed the need for ethical guardrails, use guidelines, data privacy and confidentiality, and abiding by copyright and applicable data sharing laws. We went on slight tangents with these topics, but they were related. As they were more tangential, I do not remember exactly what we discussed (feel free to remind me).

As always, this was an excellent course and I learned a lot! I hope that I was able to facilitate learning for the students as well. I am grateful to Dr. Law for inviting me, and I am grateful to the students for bringing up excellent points.

The Plug

As this is a free blog, and as I have a consulting business that I run, I assume that you will forgive me for advertising briefly for a commercial function.

In coordination with Steve Hargadon of Learning Revolution, I am hosting a bootcamp on professional productivity with ChatGPT and other AI tools! You can register without feeling like you need to attend all three sessions, because recordings will be available forever afterward.

$149/person, $599-999/institutional license

For more information scan the QR code below, or go to this link:

https://www.learningrevolution.com/professional-productivity