What is “ChatGPT Search,” or “SearchGPT”?

SearchGPT refers to the integration of ChatGPT with web-browsing capabilities. This enhanced version of ChatGPT can access and retrieve real-time information from the internet. By combining the natural language capabilities of GPT models with live web access, SearchGPT provides a dynamic tool for research, problem-solving, and current data retrieval.

While traditional GPT models rely on pre-existing datasets up to a specific cut-off date, SearchGPT bridges this gap by pulling in up-to-date information. For users navigating rapidly evolving topics, this functionality is indispensable, as long as it is understood and used correctly.

How Does It Search?

SearchGPT uses targeted queries to explore the web based on user prompts. Upon receiving a question, it crafts search-engine-like queries to find relevant results. Once the results are retrieved, it analyzes and summarizes them in natural language. In other words, it uses natural-language generation to communicate the data and information it has found.

SearchGPT emphasizes precision and relevance. Unlike general search engines, it aims to provide synthesized answers rather than a list of links. However, users can request source citations to ensure transparency and enable further exploration of the original content. Personally, I request that it always give me sources in APA format. Even if it does not “cite” sources, it does give the user two lists of links: the URLs of the sites it used to create its output, and related URLs that it crawled while finding the first list. This assists the user in lateral reading and research.
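The three steps described above (craft a query, retrieve results, summarize with sources) can be sketched as a toy program. This is only an illustration of the general flow, not how ChatGPT Search is actually implemented: the in-memory `TOY_WEB` dictionary, the example.org URLs, the stopword list, and every function name are assumptions made up for this sketch, and the "summary" is a simple concatenation where a real tool would have a language model write the answer.

```python
import re

# Hypothetical stand-in for the live web: URL -> page text.
TOY_WEB = {
    "https://example.org/baroque-forms": (
        "Baroque composition relies on basso continuo, ornamentation, "
        "and forms such as the fugue and the concerto."
    ),
    "https://example.org/baroque-performance": (
        "Baroque performance practice favors period instruments "
        "and improvised ornamentation."
    ),
    "https://example.org/jazz-history": (
        "Jazz developed in New Orleans in the early twentieth century."
    ),
}

# Small, made-up list of words to ignore when building a query.
STOPWORDS = {"what", "are", "the", "of", "and", "is", "a", "in", "main"}


def craft_query(prompt: str) -> set[str]:
    """Step 1: reduce a natural-language prompt to search keywords."""
    words = re.findall(r"[a-z]+", prompt.lower())
    return {w for w in words if w not in STOPWORDS}


def retrieve(query: set[str], top_k: int = 2) -> list[str]:
    """Step 2: rank pages by keyword overlap and keep the best matches."""
    scores = {
        url: len(query & set(re.findall(r"[a-z]+", text.lower())))
        for url, text in TOY_WEB.items()
    }
    ranked = sorted(scores, key=lambda u: scores[u], reverse=True)
    return [url for url in ranked[:top_k] if scores[url] > 0]


def answer(prompt: str) -> dict:
    """Step 3 (stand-in for summarization): join the retrieved text
    and report which pages were used, so the reader can verify them."""
    sources = retrieve(craft_query(prompt))
    summary = " ".join(TOY_WEB[url] for url in sources)
    return {"answer": summary, "sources": sources}


result = answer(
    "What are the main aspects of Baroque music composition and performance?"
)
print(result["answer"])
for url in result["sources"]:
    print("Source:", url)
```

The point of the sketch is the shape of the output: an answer plus the list of pages it was built from, which is what makes lateral reading possible.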

What Is the Output?

The output from SearchGPT is a cohesive and context-aware response that distills information from multiple sources. For instance, instead of offering a generic explanation of a topic, it integrates information on the web to craft a nuanced answer. Hallucinations, at least in my experience, are significantly less frequent when using ChatGPT Search. Even if a hallucination occurs, the user can check the sites used to create the output to verify the information (and they should be doing this anyway…).

Is It A Search Engine?

I hear this question all the time, about as much as the claim that “ChatGPT and Claude are made using the entire Web.”

No, SearchGPT is not a traditional search engine like Google or Bing. Instead, it acts as an intermediary, processing and interpreting search results into a conversational format. While search engines excel at cataloging vast amounts of content and presenting diverse results, SearchGPT focuses on curating specific, concise responses. It is best suited for users seeking synthesized information rather than raw search results.

How Do I Prompt ChatGPT to Search the Web?

To effectively use SearchGPT for web-based queries:

Be Specific: Provide detailed prompts to narrow down the scope of the search. For instance, instead of asking, “What is Baroque music?” you might ask, “What are the main aspects of Baroque music composition, interpretation, and performance?”

Do Not Treat It Like a Search Engine: ChatGPT Search does not use Boolean keywords. You can get results from using keywords by themselves, but I would advise sticking to sentences and paragraphs, simple or complex. I tried to frame prompts with various strings of Boolean keywords during my first few conversations to test it. The results were abysmal.

Request, and Take Advantage of, Citations: If you need to validate the information, ask for source links or references. Look at all the links provided by ChatGPT Search and AT LEAST skim what they say.

Iterate: If the first answer is not satisfactory, refine your prompt or ask for additional perspectives. When the content is satisfactory, you can call CustomGPT tools or use general ChatGPT to format and utilize that content in new ways.

Should I Use It To Replace My Search Engine?

SearchGPT complements, rather than replaces, traditional search engines. While it is excellent for obtaining synthesized responses and analyzing trends, it may not always provide the exhaustive array of options or technical tools available through standard search engines. Consider it an augmentative tool.

I personally have ChatGPT Search as my “default search” tool. This is not because I think it is more useful than Google in terms of the act of searching. However, I do think that it is more reliable in terms of the links it finds. With Google and Bing, you have to sift through:

text-generated (non-RAG) answers that are often not reliable,

sponsored ads masquerading as search results,

SEO-optimized (hackneyed) results,

and then reliable links to high-quality sources.

When you query ChatGPT Search or Perplexity, you have to parse through the information you get from the output, but at least you can look at the links. You do not have to worry about whether they were included in an output because sponsors paid for them. Search Engine Optimization may bias the output slightly, but enough of the legitimate sources should be present to show the consensus of authoritative sources (that may be wishful thinking), especially for users who exercise information literacy skills.

Should I Encourage Learners to Use It?

Yes, but with guidance. SearchGPT can enhance research and critical thinking by modeling how to synthesize and contextualize information. However, users must remain cautious about potential inaccuracies or biases in sources. Encouraging learners to cross-reference information and use SearchGPT as one component of their research strategy promotes information literacy.

How Can I Support Even-Handed Use of ChatGPT Search?

This relates to my general counsel about eschewing complete AI automation.

To ensure fair and effective use of SearchGPT:

Teach Information Literacy: Show users how to verify sources and recognize credible information.

Promote Transparency: Encourage acknowledgment of AI assistance in academic or professional outputs to foster ethical practices.

Balance Dependence: Urge users to develop their skills alongside AI tools to avoid over-reliance, ensuring their expertise grows in tandem with technology.

Promote CollaborAItion over AutomAItion: CollaborAItion is the concept of consistently and meaningfully interacting with AI tools and their outputs. AutomAItion, on the other hand, can lead to inaccuracy, privacy issues, diminution and atrophy of research skills, and (for some tasks) more work in the long run.

Emphasize Human-Centered AI Use: All users should “bring the human” to human-machine interactions, whether the machines are instruments or genAI tools. We must be active participants rather than passive consumers.

I expanded upon my original “piano-AI” analogy in a recent podcast episode with Zach Kinzler. This analogy is how I came up with the idea of “human-machine interaction” emphasis for this blog in the first place.

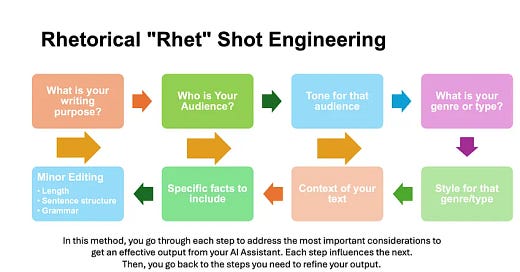

Use the Rhetorical Framework To Make a Plan: View the image below to see the fairly detailed and thorough process for planning out WHAT you need to make and HOW you should prompt your AI tools to make it.

Use the COSTAR Framework To Develop Prompts:

Context: Provide background information relevant to the task.

Objective: Define the specific goal or task for the LLM.

Style: Specify the desired writing style.

Tone: Set the attitude of the response.

Audience: Identify the intended recipients of the response.

Response: Specify the desired output format.
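For readers who build prompts programmatically, the six COSTAR elements above can be kept in a small template so that none is forgotten. The following is a minimal sketch, assuming nothing beyond the list above: the `CostarPrompt` class, its `render` method, and the sample values are all hypothetical and are not part of any official COSTAR tooling.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class CostarPrompt:
    """One field per COSTAR element (illustrative names only)."""
    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response: str

    def render(self) -> str:
        """Return the prompt as labeled lines in C-O-S-T-A-R order."""
        lines = []
        for f in fields(self):
            value = getattr(self, f.name).strip()
            if not value:
                # Refuse to build a prompt with a missing element.
                raise ValueError(f"COSTAR element '{f.name}' is empty")
            lines.append(f"{f.name.capitalize()}: {value}")
        return "\n".join(lines)


prompt = CostarPrompt(
    context="I am preparing a first-year music appreciation lesson.",
    objective=(
        "Search the web and summarize the main aspects of Baroque music "
        "composition, interpretation, and performance."
    ),
    style="Concise explanatory prose",
    tone="Neutral and encouraging",
    audience="Undergraduate students new to music history",
    response="Three short paragraphs followed by sources in APA format",
)
print(prompt.render())
```

The rendered text can be pasted into ChatGPT Search as a single prompt. Raising an error on an empty element is a design choice of this sketch; a looser version could simply skip blank fields.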

I connected the COSTAR and Rhetorical Frameworks in a brief post after an experience with Dr. Jeannette Beatrix Law’s class at Kennesaw State University.

If we trust an AI tool to completely automate any significant part of our work, we become more prone to AI dependency. The threat to information literacy matters in more fields than just research. AI automation means that the end result is derived, over multiple steps, from the AI tool’s first response to a query. If we consume the output without looking at the data, the tool’s processes, and the implications of the output, we are not using AI ethically. We are not engaging in quality control, which is important in all fields.

Using the SIFT Method When Assessing ChatGPT Search Outputs

To ensure the legitimacy of AI outputs, one effective method is the SIFT Method by Michael Caulfield: Stop, Investigate the source, Find better coverage, and Trace claims to the original context. This process helps users to verify the accuracy of information and identify any potential misinformation. By adopting CollaborAItion, educators, instructional designers, librarians, and other professionals can harness the power of AI while maintaining a critical eye. Collaboration, rather than automation, ensures that the technology serves as a valuable tool rather than a replacement for human insight.

You do not have to rely on ChatGPT’s self-provided internet interface. You can use ChatGPT in tandem with Google or any other search engine. If we have all of the aspects of AI literacy, we can navigate information with the aid of generative AI tools. I have created CustomGPTs for this purpose, and many of them can be found at libraryrobot.org. Check out the Search Query Optimizer, especially.

You can (and should, in my opinion) use ChatGPT Search and other AI tools to create Generative OERs and promote generative learning. It will be an excellent opportunity to help students learn for themselves (about how to use AI efficiently and about their chosen topic).

Walkthroughs

At the request of Dr. Denise Turley, I created brief walkthroughs of both iterations of ChatGPT Search.

Conclusion

ChatGPT Search represents a significant leap forward in how AI tools interact with the web. It provides an intuitive, conversational interface for accessing and synthesizing real-time information. While it is not a replacement for traditional search engines, its role in contextualizing and curating content makes it a valuable asset in education, research, and professional contexts. By fostering responsible use and coupling it with foundational information literacy skills, users can maximize its potential while safeguarding intellectual integrity.

Further Reading

ChatGPT Search and Perplexity have come a long way from the initial “web-enhanced conversations” that provided text generation without linking to sources. It was this type of conversation that Bender and Shah warned against in their presentation and paper “Situating Search” at the 2022 Conference on Human Information Interaction and Retrieval. I respect what Bender has said about multiple aspects of AI tools, but I have written elsewhere (and will write again) about my critique of her “stochastic parrot” argument.

In this paper, Bender and Shah state that people use search engines to “learn, explore, and make decisions.” They also state that people need more “support and guidance” in the information literacy process. The paper implies that while dialogue-based tools do not provide this type of support, search engines do. Furthermore, while Bender and Shah acknowledge that there are biases and other problems with search engines, they claim that these issues will only be worse with natural language processing.

To me, this paper suggests that Bender and Shah do not trust the public to exercise critical information literacy. I truly do not blame them. After all, there is a demonstrated decline in information literacy skills among junior high students. However, if educators and their students proactively engage in information literacy skills, they will be able to use AI tools, including ChatGPT Search, productively.

I'm curious why ethics is not mentioned in any of your tips or instructional suggestions. Shouldn't students at least consider a) the fact that ChatGPT was trained on words and art stolen from writers and artists, and b) the fact that there are distinct biases in material generated by ChatGPT? Shouldn't they know that when you enter something into ChatGPT to summarize or whatever, you've now given it to OpenAI? Did the writer expressly give permission to use their material in this way, and what does the copyright or Creative Commons license say? It would be interesting for students to put themselves in others' shoes: when they've written their masterpiece, will they be ok to have AI take it, chop it up, and spit it out in what people now call "AI slop"?

If you care about the environment, shouldn't you consider how much energy was used to run the AI, so you could take a shortcut? If you care about workers and decolonization, shouldn't you consider the invisible "ghost" workers who labor in horrendous conditions so you can get a quick answer?

So many thought-provoking questions to consider before choosing to use generative AI tools.