Your Brain on BoodleBox

Rarely do I see academics and information professionals completely abandon the central principles of #infolit, except when they are engaging in pro- or anti-AI hype.

In this article, we are going to discuss a massive article on a moderate-sized study that has had a significant impact on how we perceive LLMs… and it’s only been a few days! Both pro- and anti-AI hypists have been “analyzing” the paper (and by analyzing, I mean putting it through a chatbot for a superficial summary).

Then, we’ll discuss media misrepresentations of the message of the study, its limitations, its actual message, and how we can apply its findings to our new favorite genAI tool, BoodleBox.

Initial Article: Your Brain on ChatGPT

At this point, I honestly do not think that I need to tell anyone what the participants did in this study; there have been countless posts and blog articles about it already. However, I like to establish my own ground, so please bear with me.

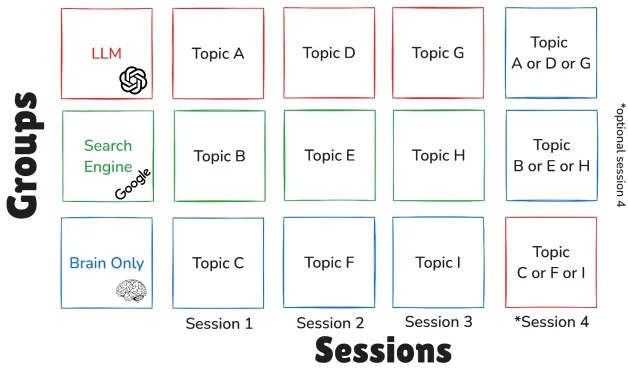

Visual diagram of the study, illustrating the different groups and sessions. Pay attention to the top and bottom rows. Source: MIT

Dr. Nataliya Kos’myna et al., of MIT, recruited 54 students from multiple higher education institutions in the Boston area. In both gender and age, there was remarkable diversity in the participants. All participants were assigned to one of three groups:

LLM - For the first three sessions, these students only used LLMs to write essays.

Search Engine - For the first three sessions, their only sessions, these students used search engines alone for help writing essays.

Brain - For the first three sessions, these students only used their brains to write an essay.

In the first three sessions, all students were given a series of SAT essay prompts and told to choose one of the prompts to write about. Each student was hooked up to an EEG machine and asked to write for twenty minutes. Then, their EEG readouts were examined and a survey was administered. Their essays were analyzed and assessed by educators and a specially-created AI “judge.” Students were also assessed on their ability or inability to quote from the essays they had written only a few minutes earlier.

In the fourth session, all variables were the same except for one thing: the LLM and Brain groups switched places. Those who had used AI were expected to use their minds alone; those who had used their brains alone now had access to an AI assistant. Each of these students was asked to rewrite one of the essays they had written before, so they would have previous knowledge and experience to build upon. These students and their essays were again analyzed the same way.

EEG readouts examined the cognitive load and the cognitive engagement of students throughout the writing process. In the words of the authors, “the Brain‑only group exhibited the strongest, widest‑ranging networks, Search Engine group showed intermediate engagement, and LLM assistance elicited the weakest overall coupling.”

Context: Where Did The Phrase Come From?

The opening phrase of the paper comes from a late-1980s PSA shock ad called “This Is Your Brain on Drugs,” as well as a 1997 remake in which Rachael Leigh Cook completely destroys a kitchen with a frying pan. The message was, “drugs are bad, they will destroy your mind and your entire life.”

With a title like that, it is not surprising that the media had such a negative reaction to the study. They were primed to see the negative aspects and elevate those over the positive message of the last sections. This is why you read things all the way through!

Engage with #InfoLit: Rejecting Incorrect Interpretations of the Article

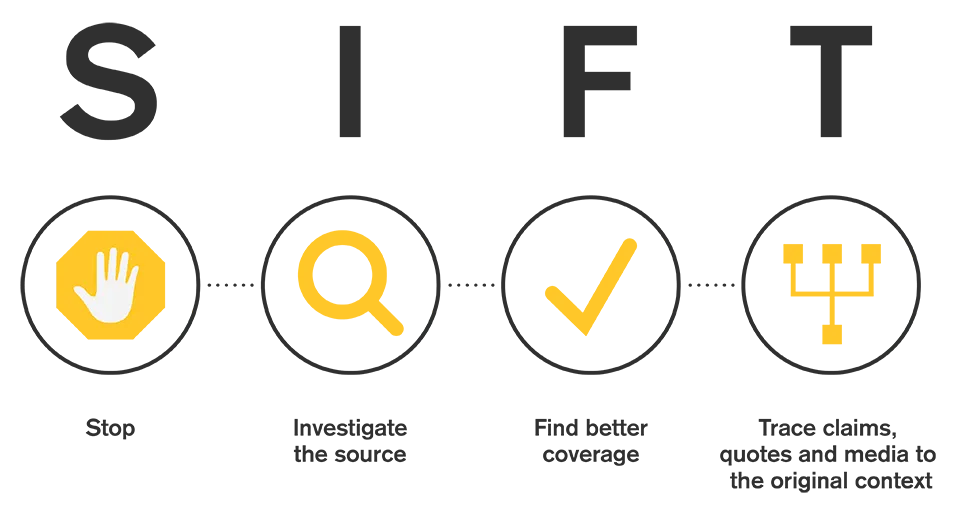

See if you can trace the elements of the SIFT Method throughout this post.

Reading is Important

The best thing to do is actually read the article itself. I cannot count the number of posts or articles about this study I have read that either:

cite another article about the study instead of the study itself

cite an AI-generated summary that they produced in five seconds, not realizing that the authors created an AI trap that only granted them limited insights.

Take out the references and appendices and it's 145 pages. Take out figures, it's about 120 pages. That's a novella. That’s the “Experiment of Dr. Ox.” I read it in ninety minutes. If people can't read that much without running to AI for help, we have a major problem.

"Reed, I have a lot of stuff to do." Then read the abstract and the three-page summary at the beginning and the discussion at the end. Total of like 20 pages.

This is pages 1-4, 38-41, 117-121, 133-141.

Both pro- and anti-hype writers made significant errors in analyzing the article, and I will explain what their arguments were briefly to give context to my own interpretation and application.

Pro-Hype

Pro-hype readers were extremely quick to point out the limitations of the study, and the fact that it was unprecedented and not peer reviewed yet (that’s what pre-prints are…). However, these limitations were clearly and explicitly stated by the authors both inside and outside the paper. From their website:

“to the best of our knowledge, it is one of the first protocols, thus we do expect more papers/studies (from ourselves and other researchers!) with different protocols, populations, tasks, methodologies, that will add to the general understanding of the use of this technology in different aspects of our lives.”

The authors, or at least the principal author, already have more projects related to this. For example, they are currently conducting a study on “vibe coding.”

Another issue that pro-hype writers decry is that “essays are awful final assessments,” or “essays are meaningless,” which was not at all the topic of the paper. The issue was how much cognitive engagement participants showed as they wrote, not the quality of their arguments; yes, quality was assessed, but that was for thoroughness, not as a major finding of the paper.

Anti-Hype

You can almost always tell anti-hype posts about anything, including this article, by the loaded language: “stupid,” “dumb,” “damage,” etc. They also refer to “brain scans” and use other inaccurate ways of portraying EEG technology.

These articles, to which I will not link, tend to focus on the first two thirds of the paper without examining the last third at all, which is where Session 4 is discussed and where the thorough Discussion and Limitations sections are located.

They say that the article “proves” that ChatGPT makes people dumber or reduces their cognitive abilities. They completely ignore, it seems, the concept of human agency and motivation in the whole experience. The human makes the difference in this study.

Still others attacked the authors (never a good idea!) and implied that they had not thought through the study. One of my most respected colleagues (who, needless to say, I respect a bit less now) commented that the authors had cognitive deficits. I do not think that my colleague read any of the authors’ CVs…

Media Misrepresentations

Media reporting on this article happened almost immediately. As was expected, they almost completely agreed with my anti-hype colleagues. If academics used the words “dumb” or “stupid,” media used the words “horrifying” or “terrifying,” and added the TikTok-ism “brainrot” to the list.

The Real Point: Collaborative AI Benefits Writers

When it comes down to it, this study is really about cognitive processes, not about cognitive impacts of genAI. I do not know how people are getting the “ChatGPT fries neurons” angle. I imagine that it’s because there are images of EEG results, and they figure that negative impacts on the physical brain are what is being studied. As I discussed before, the title that was pulled from the War on Drugs definitely did not help public perception.

What Were they Actually Assessing?

We have to remember that there were no instructions given to the LLM users as to how they should use the tool. The omission was deliberate: the researchers were not interested in prescribing a method, because unguided use was exactly what they wanted to study. Copy-paste is the default use, not best practice. They wanted to evaluate how people do use ChatGPT, not how they should. This is not a flaw in the study. It was crucial to understanding cognitive engagement.

The researchers in this paper were studying the cognitive engagement and cognitive processes initiated by writing (one example among multiple!) with a search engine, ChatGPT, or on one’s own intellect alone.

Session 4 on its own has significant insights that directly contradict both the pro- and anti-hype at once!

Brain-to-LLM Group

The real key here is the Brain-to-LLM Group, which, if you recall, spent the first three sessions writing using only their minds. Writers who started without AI and later adopted it showed (you’ll notice that only one of these insights was “pull-able” from the table at the beginning, and thus we see the importance of reading the whole paper):

improved engagement and richer revision (121).

“better integration of content compared to previous Brain sessions” (121).

“Used [m]ore information seeking prompts [than people who started out with the LLMs]” (139).

“Scored mostly above average across all groups” (3).

“AI-supported re-engagement invoked high levels of cognitive integration, memory reactivation, and top-down control” (139).

In other words, they put in the writing effort beforehand and then, and only then, used the LLM, and they actually benefitted from the use of genAI. Granted, they were assigned to these groups; they did not choose this sequence themselves.

On the other hand, the students who wrote with the LLM the first three sessions had a very difficult time writing on their own. They seemed to have become passive in their writing, depending on the AI tool. Their cognitive engagement was only slightly higher than it had been when they were writing with the LLM.

Thus…

This study is saying that overreliance on AI makes you less efficient and thorough, which is true. Actually, one of the conclusions of the study was that pre-writing and conscientious writing before using an AI tool significantly increased and broadened cognitive engagement (which I suppose the media would call "making your brain smarter?").

In the words of the lead author, the study “suggest[s] that strategic timing of AI tool introduction following initial self-driven effort may enhance engagement and neural integration.”

This was not a paper criticizing all use of AI in education. It was a study that cautioned against introducing LLMs as writing aids too early in the writing experience. In the words of Alberto Romero, it critiqued “the abuse of LLMs in education settings … This is your brain telling you what happens when you abuse ChatGPT.”

But Wait, What About…

Okay, there are quite a number of people who are insisting on looking at all of the limitations of the paper. Their zeal to attribute the limitations to malfeasance or incompetence on the part of the authors is almost amusing. They clearly missed the page-and-a-half section at the very end. Limitations acknowledged in the paper include:

geographic area

background (large academic institutions)

ChatGPT was the only LLM used

only one modality present, so only writing, particularly essay writing, could be analyzed

writing tasks were analyzed in aggregate and not in discrete sections

EEG is not as powerful as fMRI

findings are not generalizable

study was not longitudinal

no way to compare writing in the moment with previous writing samples

This is quite a long list, and quite transparent. One of my colleagues who also read the paper mentioned that there was one more limitation, that “in writing, we assess essay ownership throughout the writing experience, not just at the end.” However, this is probably a domain-specific limitation and might not be necessary to add to future studies.

Conclusion: BoodleBox Facilitates Collaborative AI

So, how do we use “strategic timing of AI tool introduction” in the digital classroom? When we look at online education, or for others, when we go to the internet, how do we start writing without completely relying on AI?

BoodleBox has the answer, particularly when we are working in groups or when we deliberately want to shut off a conversation until we are ready to collaborate again.

Mode Menu

In the chatbar of BoodleBox, there is a three-dot menu at the top right. When you click on it, you have an option to enter Bot Mode or Message Mode, as well as the Enable Knowledge to Context Mode. In the context of the implications of this study, to ensure optimal engagement and personal writing connection, one would:

1. Start with the Message Mode. This mode lets you send messages to others, or to yourself, and does not have the AI tool respond at all. This way, you can workshop outline or project details on your own before bringing an AI into the conversation, and you will not have to switch back and forth between different windows or programs.

2. Once you are done with that, go find or select some Knowledge Documents for your Knowledge Base. Find what information and sources you want to rely on.

3. Write out your outline or details and do some freewriting. Create a decent second or third draft, or write a fair amount of detail into your outline.

4. Then, enter Bot Mode to have the AI talk to you. Notice that this is the fourth step in this process. Have it workshop the outline or examine your syntax or serve as an editor.

5. Click on the Enable Knowledge to Context Mode to incorporate documents and give the LLM more context. In this way, you will have your own words, edited or rearranged by BoodleBox, with documents and sources selected by you and suggested by BoodleBox.

You do not even have to do step 5 if you do not want to. I personally prefer to incorporate documents and sources on my own. Incidentally, I feel more ownership over the final product when I do that.

Your Brain on BoodleBox Is Engaged

One of the first things that impressed me about BoodleBox was that it emphasizes and encourages collaborative AI, or “collaborAItion.” This is essentially what “Your Brain on ChatGPT” was trying to encourage—thoughtful, premeditated, deliberate genAI use in conversations in which the human user is in control and has clear objectives. …

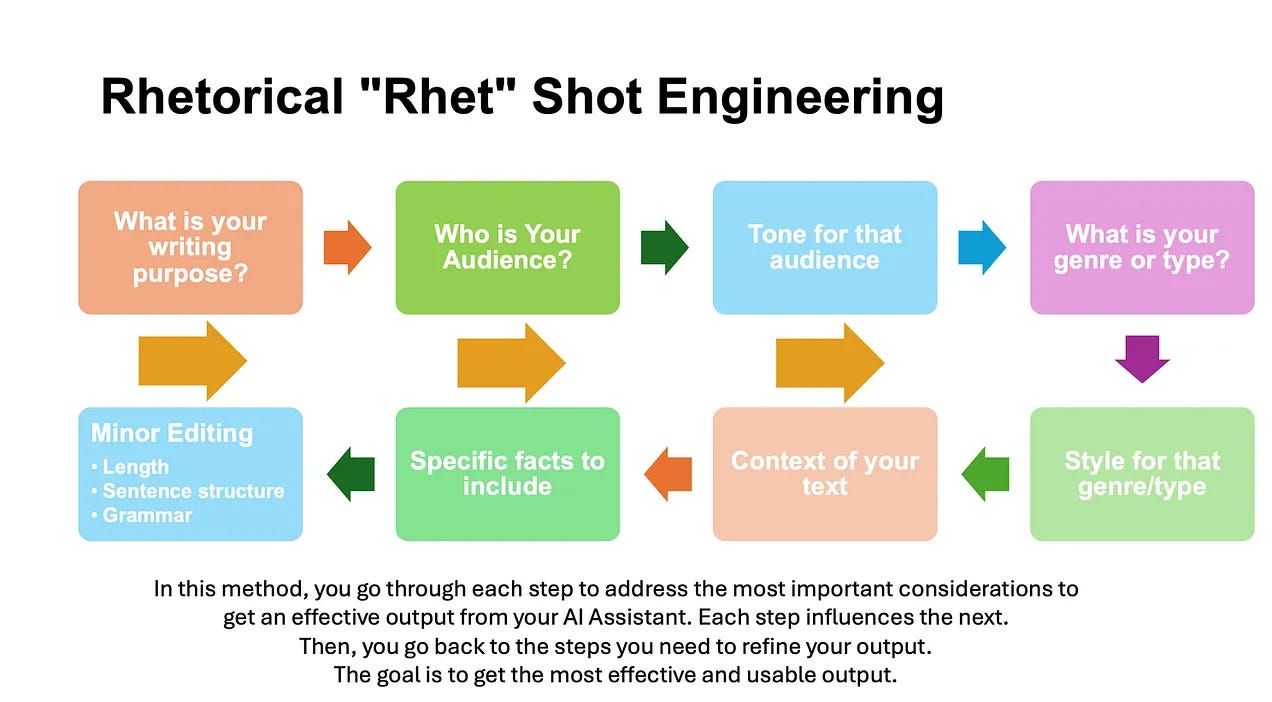

…which brings to mind

‘s Rhet Shot Framework… which I will apply to BoodleBox in our next post!